How design leadership transformed fragmented AI ideas into a credible, phased roadmap that restored enterprise confidence

![Mobile device showing a productivity app interface](https://cdn.prod.website-files.com/68235c9a6fae25c90dd07a14/696f184434f34b3d92671703_008ec11dcc29714a457f54a5c0b70334_1-1-1%20Calendar%20-%20play%20small.png)

In late 2023, Groove found itself at a familiar but risky inflection point.

AI had become a clear expectation for enterprise customers, yet the product organization’s thinking was fragmented—spread across roadmap notes, slide decks, and informal conversations. While many AI ideas already existed, there was no single, coherent way to explain how those ideas fit together, how they would show up in real seller workflows, or which bets mattered most in the near term.

The urgency escalated when one of Groove’s largest enterprise customers raised concerns about the lack of clarity in the AI roadmap ahead of an upcoming renewal. Leadership understood the risk but lacked a clear starting point. Waiting for a traditional planning cycle was not an option.

I was asked to step in and help bring clarity quickly.

Rather than treating this as a feature-definition exercise, I proposed and led a focused, cross-functional design sprint. The goal was not to invent an AI strategy under pressure, but to make an existing, implicit strategy legible—aligning leadership, product, and customer-facing teams around a credible, phased direction that could withstand enterprise scrutiny.

This case study focuses on how design leadership was used to compress alignment, force prioritization, and restore confidence at a moment when ambiguity carried real commercial risk.

In late 2023, Groove leadership was alerted to growing concern from one of our largest enterprise customers regarding the clarity and credibility of our AI roadmap. The feedback was not about a single missing feature—it reflected a broader uncertainty about how AI would meaningfully show up across the Groove experience, and whether the company had a coherent plan for enterprise-scale use cases.

The timing amplified the risk. The customer’s renewal was approaching in the spring, and there was limited runway to respond. Waiting for long-term roadmap cycles or incremental discovery would not address the immediate concern. Leadership needed to demonstrate clear thinking, prioritization, and direction well ahead of renewal conversations.

At the same time, this was not a blank-slate problem. Many of the underlying ideas—AI-assisted actions, AI-authored communications, summaries, and intelligence layered across workflows—had already been discussed across product, design, and engineering. What was missing was not intent, but a shared, organized view of how these ideas fit together, how they would deliver value to enterprise customers, and which bets mattered most in the near term.

Leadership explicitly asked me to step in and help figure out how to bring clarity quickly. The challenge was not simply to produce concepts, but to create alignment—across executives, product managers, designers, and engineers—under tight time constraints and with incomplete inputs.

The stakes were real:

The goal was not to invent a roadmap under pressure, but to rapidly synthesize existing thinking into a coherent, defensible narrative—and to do so in a way that could guide real product decisions, not just satisfy a presentation moment.

This effort began as an executive intervention, not a planned product initiative.

Leadership asked me to step in and determine how to move forward. After assessing the ambiguity, timelines, and number of stakeholders involved, I proposed running a focused design sprint as the fastest way to bring structure, shared understanding, and momentum to the problem.

The intent of the sprint was explicit:

I led the effort as a player-coach, owning both the operating model and key parts of the design work.

My responsibilities included:

While I proposed the sprint as the approach, the urgency and mandate were clear from the outset. This was an “all hands on deck” moment, and the sprint became the mechanism for turning diffuse ideas into a shared plan.

The sprint brought together a senior, cross-functional group:

Names are intentionally omitted here—the emphasis was on collective ownership and speed, not individual attribution.

We loosely followed the structure of the Design Sprint format popularized by Google Ventures (GV), adapted for enterprise product complexity and a compressed timeline.

Over roughly two weeks (with a short extension for prototype polish), the team worked through:

Importantly, not every area was explored equally. We deliberately prioritized depth over breadth—focusing most heavily on the first three concepts with the highest perceived enterprise impact.

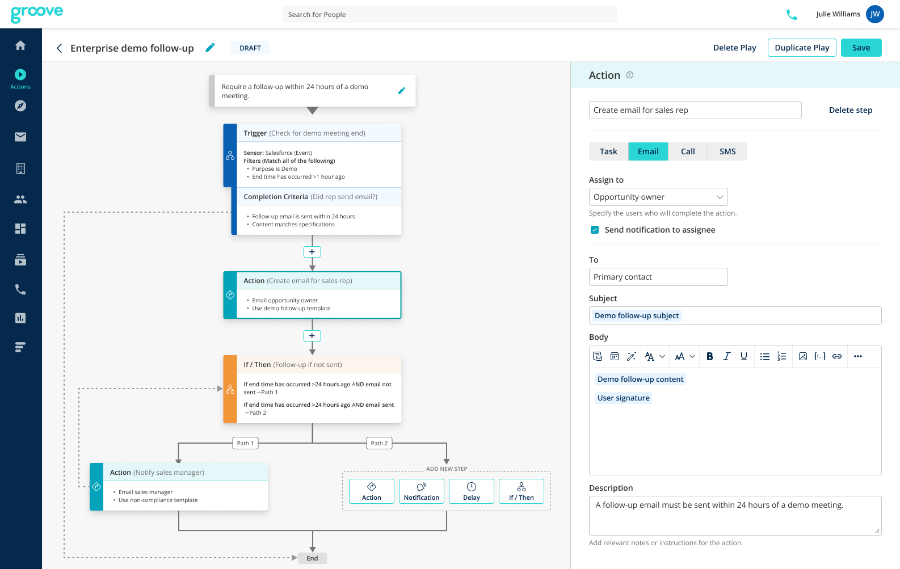

The sprint culminated in a demo-ready story flow and a high-fidelity Figma prototype covering:

These were chosen not because they were the only ideas, but because they best demonstrated how AI could show up credibly inside real Groove workflows.

The prototype was used to align leadership internally and to communicate a clear, grounded AI direction externally. Several of the concepts explored during the sprint were later shipped, validating both the prioritization and the approach.

The immediate mandate was explicit and time-bound:

Create something credible we could show an enterprise customer—quickly—that demonstrated clear thinking, prioritization, and intent around Groove’s AI direction.

This was not a request to ship production-ready features or finalize a long-term roadmap. Leadership needed a tangible artifact that could:

Crucially, the mandate was as much about communication and alignment as it was about design. The output needed to work externally—with a skeptical enterprise customer—and internally, giving executives and product teams a shared reference point for future decisions.

Early in the sprint, it became clear that the risk was not a lack of AI ideas. The risk was ambiguity:

What the product actually needed was not more ideation, but structured convergence.

To meet the mandate, the work had to:

This reframing shaped every decision in the sprint, from which concepts were explored deeply to which were intentionally left incomplete.

Because the goal was credibility under pressure—not feature delivery—success was defined by a different set of criteria than a typical product cycle.

We aligned on the sprint being successful if it produced:

The bar was not perfection. The bar was confidence—earned through clarity, structure, and realism.

Meeting these criteria would allow Groove to engage enterprise customers from a position of intent and momentum, rather than defensiveness or ambiguity, and would give the organization a durable foundation for subsequent AI investment and delivery.

With limited time and fragmented inputs, a traditional discovery or roadmap process would have failed. The problem was not a lack of ideas or effort—it was the absence of a shared frame. We needed a way to compress weeks of alignment into days without sacrificing rigor.

I proposed a focused design sprint as the operating mechanism. The goal was not speed for its own sake, but decision compression: bringing the right people into the same room, working from the same artifacts, and forcing explicit trade-offs.

The sprint was structured around three principles:

Over roughly two weeks (with a short extension to polish the prototype), we moved from fragmented inputs to a coherent, review-ready prototype that leadership could confidently stand behind.

(Using design to force clarity)

The Whimsical board functioned as the central working surface throughout the sprint. It was never intended to be customer-facing. Its purpose was alignment.

At the top, we grounded the team in AI interaction patterns and emerging best practices. This set shared expectations about what “good” looked like and kept the team from chasing novelty for its own sake.

From there, we mapped the user journey and identified where AI could plausibly augment real Groove workflows—particularly where it intersected with Groove Plays and execution surfaces already familiar to enterprise users.

The core of the board was organized into seven AI feature areas, each owned by a product manager and explored through a consistent lens:

Importantly, the board becomes less complete from left to right. This was intentional. The diminishing fidelity visually reinforced prioritization and helped leadership understand not just what we were proposing, but what we were not committing to yet.

At the bottom of the board, we defined a single demo flow. This story flow became the backbone of the Figma prototype and the primary artifact used in leadership and customer conversations.

The value of the board was not the ideas themselves. It was the shared clarity it created—allowing a diverse group of stakeholders to reason about AI direction from the same frame and make decisions quickly without reverting to opinion or instinct.

The sprint surfaced seven viable AI feature directions, each with a clear owner, hypothesis, and interaction model. The constraint was not ideation quality—it was time, credibility, and enterprise expectations.

We prioritized AI-Prioritized Actions, AI-Written Emails, and Aggregate Summaries for three reasons:

Features to the right of the board were not deprioritized because they lacked merit. They were left intentionally incomplete because:

This trade-off was deliberate. Depth in a smaller number of features created confidence; superficial coverage across all seven would not have.

The Figma prototype was designed as a storytelling device, not a specification.

Its purpose was to answer a single question for enterprise stakeholders:

“Do you have a coherent AI plan that fits naturally into how sellers already work?”

To do that, the demo flow intentionally followed a realistic seller journey—from signal → interpretation → action—rather than jumping between isolated AI features. Each moment in the flow reinforced a few key ideas:

The prototype did not attempt to show every edge case or future state. Instead, it demonstrated conceptual integrity—how multiple AI capabilities could coexist as part of a single, evolving system.

That narrative clarity was what made the roadmap credible, not the fidelity of individual screens.

The most immediate outcome of the sprint was not feature delivery—it was clarity. Prior to this work, Groove’s AI strategy existed across scattered conversations, roadmap slides, and informal plans. The sprint consolidated that thinking into a coherent, review-ready narrative leadership could confidently stand behind.

The resulting prototype was demoed multiple times across leadership and customer-facing teams and became a central artifact in renewal discussions with a flagship enterprise customer. While positioned as a directional roadmap rather than a delivery commitment, it demonstrated that Groove had a thoughtful, phased plan for how AI would meaningfully support sales execution over time.

This shift—from fragmented intent to a unified plan—was pivotal in restoring confidence during renewal conversations.

Rather than forcing premature commitments, the work helped leadership align on sequencing:

The sprint’s prioritization, with deep focus on AI-Prioritized Actions, AI-Written Emails, and Aggregate Summaries, created a clear path forward. Those areas later shipped incrementally over the course of the year, validating the original prioritization without requiring rework or reframing.

The prototype functioned as a shared reference point long after the sprint concluded, reducing ambiguity and re-litigation as teams moved into delivery.

Beyond product outcomes, this work materially shifted how design showed up at a leadership level.

At a moment when senior leadership—including the CEO—was unsure where to begin, design provided the operating model. By proposing and leading a structured sprint, I turned an urgent, high-risk situation into a focused, collaborative effort that aligned product, design, engineering, and go-to-market stakeholders.

This was not about producing polished UI. It was about using design to force clarity, compress decision-making, and help the organization move forward with confidence.

This sprint did more than address an immediate enterprise concern. It changed how the organization approached AI altogether.

At an execution level, it gave leadership a concrete artifact to anchor conversations with customers—shifting discussions from abstract promises to visible intent and sequencing.

At an organizational level, it established a repeatable pattern:

Internally, it aligned product, design, engineering, and customer-facing teams around a shared understanding of what “good” looked like for AI inside Groove. Externally, it restored confidence that Groove was thinking intentionally about AI—not reactively.

Most importantly, it demonstrated how design leadership can create clarity fast when the cost of waiting is too high.